In 2015, starfish, octopus, crabs and other Pacific Ocean life stumbled upon a temporary addition to the seafloor, more than half a mile from the shoreline: a 38,000-pound container. But in the ocean, 10 feet by 7 feet is quite small. The shrimp exploring the seafloor made more noise than the datacenter inside the container, which had computing power equivalent to 300 desktop PCs.

But the knowledge gained from the three months this vessel was underwater could help make future datacenters more sustainable, while at the same time speeding data transmission and cloud deployment. And yes, maybe even someday, datacenters could become commonplace in seas around the world.

The technology to put sealed vessels underwater with computers inside isn’t new. In fact, it was one Microsoft employee’s experience serving on submarines that carry sophisticated equipment that got the ball rolling on this project. But Microsoft researchers do believe this is the first time a datacenter has been deployed below the ocean’s surface. Going under water could solve several problems by introducing a new power source, greatly reducing cooling costs, closing the distance to connected populations and making it easier and faster to set up datacenters.

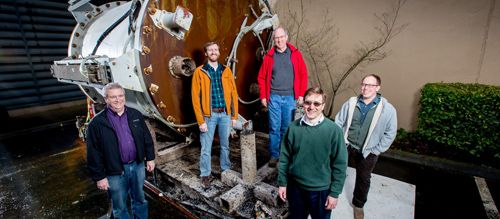

Microsoft Project Natick team (L-R): Eric Peterson, Spencer Fowers, Norm Whitaker, Ben Cutler and Jeff Kramer. (Photo by Scott Eklund/Red Box Pictures)

A little background gives context for what led to the creation of the vessel. Datacenters are the backbone of cloud computing, and contain groups of networked computers that require a lot of power for all kinds of tasks: storing, processing and/or distributing massive amounts of information. The electricity that powers datacenters can be generated from renewable power sources such as wind and solar, or, in this case, perhaps wave or tidal power. When datacenters are closer to where people live and work, there is less “latency,” which means that downloads, Web browsing and games are all faster. With more and more organizations relying on the cloud, the demand for datacenters is higher than ever – as is the cost to build and maintain them.

All this combines to form the type of challenge that appeals to Microsoft Research teams who are experts at exploring out-of-the-box solutions.

Ben Cutler, the project manager who led the team behind this experiment, dubbed Project Natick, is part of a group within Microsoft Research that focuses on special projects. “We take a big whack at big problems, on a short-term basis. We take a look at something from a new angle, a different perspective, with a willingness to challenge conventional wisdom.” So when a paper about putting datacenters in the water landed in front of Norm Whitaker, who heads special projects for Microsoft Research NExT, it caught his eye.

“We’re a small group, and we look at moonshot projects,” Whitaker says. The paper came out of ThinkWeek, an event that encourages employees to share ideas that could be transformative to the company. “As we started exploring the space, it started to make more and more sense. We had a mind-bending challenge, but also a chance to push boundaries.”

One of the paper’s authors, Sean James, had served in the Navy for three years on submarines. “I had no idea how receptive people would be to the idea. It’s blown me away,” says James, who has worked on Microsoft datacenters for the past 15 years, from cabling and racking servers to his current role as senior research program manager for the Datacenter Advanced Development team within Microsoft Cloud Infrastructure & Operations. “What helped me bridge the gap between datacenters and underwater is that I’d seen how you can put sophisticated electronics under water, and keep it shielded from salt water. It goes through a very rigorous testing and design process. So I knew there was a way to do that.”

James recalled the century-old history of cables in oceans, evolving to today’s fiber optics found all over the world.

“When I see all of that, I see a real opportunity that this could work,” James says. “In my experience the trick to innovating is not coming up with something brand new, but connecting things we’ve never connected before, pairing different technology together.”

Building on James’s original idea, Whitaker and Cutler went about connecting the dots.

Cutler’s small team applied science and engineering to the concept. A big challenge involved people. People keep datacenters running. But people take up space. They need oxygen, a comfortable environment and light. They need to go home at the end of the day. When they’re involved you have to think about things like landscaping and security.

So the team moved to the idea of a “lights out” situation. A very simple place to house the datacenter, very compact and completely self-sustaining. And again, drawing from the submarine example, they chose a round container. “Nature attacks edges and sharp angles, and it’s the best shape for resisting pressure,” Cutler says. That set the team down the path of trying to figure out how to make a datacenter that didn’t need constant, hands-on supervision.

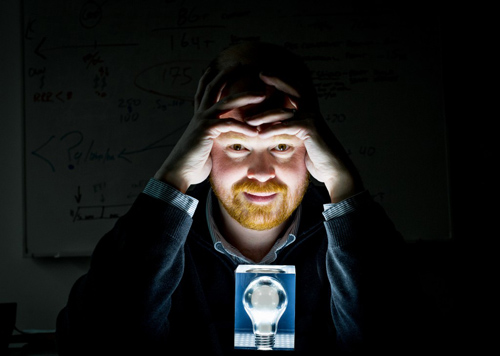

Sean James of the Cloud Infrastructure & Operations team. (Photo by Scott Eklund/Red Box Pictures)

This initial test vessel wouldn’t be too far off-shore, so they could hook into an existing electrical grid, but being in the water raised an entirely new possibility: using the hydrokinetic energy from waves or tides for computing power. This could make datacenters work independently of existing energy sources, located closer to coastal cities, powered by renewable ocean energy.

That’s one of the big advantages of the underwater datacenter scheme – reducing latency by closing the distance to populations and thereby speeding data transmission. Half of the world’s population, Cutler says, lives within 120 miles of the sea, which makes it an appealing option.
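To put the distance argument in rough numbers, here is a minimal sketch of the propagation delay over fiber for a given distance. The velocity factor (light in glass traveling at about two-thirds of its vacuum speed) and the function name `round_trip_ms` are illustrative assumptions, not figures from the Natick team, and the estimate ignores routing, switching and queuing delays, which usually dominate in practice.

```python
# Rough propagation-delay estimate; all constants are illustrative assumptions.
SPEED_OF_LIGHT_KM_S = 299_792.458   # speed of light in vacuum
FIBER_VELOCITY_FACTOR = 0.67        # assumed: light in fiber travels at ~2/3 c
KM_PER_MILE = 1.609344


def round_trip_ms(distance_miles: float) -> float:
    """Return the round-trip propagation delay over fiber, in milliseconds."""
    distance_km = distance_miles * KM_PER_MILE
    speed_km_s = SPEED_OF_LIGHT_KM_S * FIBER_VELOCITY_FACTOR
    one_way_s = distance_km / speed_km_s
    return 2 * one_way_s * 1000


# Compare a datacenter 120 miles away with one ten times farther.
for miles in (120, 1200):
    print(f"{miles} miles: about {round_trip_ms(miles):.1f} ms round trip")
```

Under these assumptions, a datacenter 120 miles away adds only about 2 milliseconds of round-trip propagation delay, and the delay grows in direct proportion to distance.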

This project also shows it’s possible to deploy datacenters faster, turning deployment from a construction project – which requires permits and other time-consuming steps – into a manufacturing one. Building the vessel that housed the experimental datacenter took only 90 days. While every datacenter on land is different and needs to be tailored to varying environments and terrains, these underwater containers could be mass produced for very similar conditions underwater, where the water is consistently colder the deeper it is.

Cooling is an important aspect of datacenters, which normally run up substantial costs operating chiller plants and the like to keep the computers inside from overheating. The cold environment of the deep seas automatically makes datacenters less costly and more energy efficient.

Once the vessel was submerged last August, the researchers monitored the container from their offices in Building 99 on Microsoft’s Redmond campus. Using cameras and other sensors, they recorded data like temperature, humidity, the amount of power being used for the system, even the speed of the current.

“The bottom line is that in one day this thing was deployed, hooked up and running. Then everyone is back here, controlling it remotely,” Whitaker says. “A wild ocean adventure turned out to be a regular day at the office.”

A diver would go down once a month to check on the vessel, but otherwise the team was able to stay constantly connected to it remotely – even after they observed a small tsunami wave pass.

The team is still analyzing data from the experiment, but so far, the results are promising.

“This is speculative technology, in the sense that if it turns out to be a good idea, it will instantly change the economics of this business,” says Whitaker. “There are lots of moving parts, lots of planning that goes into this. This is more a tool that we can make available to datacenter partners. In a difficult situation, they could turn to this and use it.”

Christian Belady, general manager for datacenter strategy, planning and development at Microsoft, shares the notion that this kind of project is valuable for the research gained during the experiment. It will yield results, even if underwater datacenters don’t start rolling off assembly lines anytime soon.

“While at first I was skeptical with a lot of questions. What was the cost? How do we power? How do we connect? However, at the end of the day, I enjoy seeing people push limits,” Belady says. “The reality is that we always need to be pushing limits and try things out. The learnings we get from this are invaluable and will in some way manifest into future designs.”

Belady, who came to Microsoft from HP in 2007, is always focused on driving efficiency in datacenters – it’s a deep passion for him. It takes a couple of years to develop a datacenter, but it’s a business that changes hourly, he says, with demands that change daily.

“You have to predict two years in advance what’s going to happen in the business,” he says.

Belady’s team has succeeded in making datacenters more efficient than they’ve ever been. He founded an industry metric, power usage effectiveness (PUE), and in that regard, Microsoft is leading the industry. Datacenters are also using next-generation fuel cells – something James helped develop – and wind power projects like Keechi in Texas to improve sustainability through alternative power sources. Datacenters have also evolved to save energy by using outside air instead of refrigeration systems to control temperatures inside. Water consumption has also gone down over the years.
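For readers unfamiliar with the metric, PUE is commonly defined as the total energy a facility draws divided by the energy that reaches the IT equipment, so a value closer to 1.0 means less overhead for cooling and power distribution. The sketch below illustrates that ratio. The function name and the sample figures are hypothetical and are not Microsoft measurements.

```python
def power_usage_effectiveness(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Return PUE: total facility energy divided by IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


# Hypothetical figures: the same 1,000 kWh IT load with heavy vs. light cooling overhead.
chiller_cooled = power_usage_effectiveness(1500, 1000)
free_cooled = power_usage_effectiveness(1100, 1000)
print(f"chiller-cooled PUE: {chiller_cooled:.2f}, free-cooled PUE: {free_cooled:.2f}")
```

In this made-up comparison, cutting the cooling overhead from 500 kWh to 100 kWh lowers PUE from 1.5 to 1.1, which is the kind of gain that cold surroundings could in principle provide.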

Belady, who says he “loved” this project, says he can see its potential as a solution for latency and quick deployments.

“But what was really interesting to me, what really surprised me, was to see how animal life was starting to inhabit the system,” Belady says. “No one really thought about that.”

Whitaker found it “really edifying” to see the sea life crawling on the vessel, and how quickly it became part of the environment.

“You think it might disrupt the ecosystem, but really, it’s just a tiny drop in an ocean of activity,” he says.

The team is currently planning the project’s next phase, which could include a vessel four times the size of the current container with as much as 20 times the compute power. The team is also evaluating test sites for the vessel, which could be in the water for at least a year, deployed with a renewable ocean energy source.

Meanwhile, the initial vessel is now back on land, sitting in the lot of one of Microsoft’s buildings. But it’s the gift that keeps giving.

“We’re learning how to reconfigure firmware and drivers for disk drives, to get longer life out of them. We’re managing power, learning more about using less. These lessons will translate to better ways to operate our datacenters. Even if we never do this on a bigger scale, we’re learning so many lessons,” says Peter Lee, corporate vice president of Microsoft Research NExT. “One of the things that’s so fun about a CEO like Satya Nadella is that he’s hard-nosed business savvy, customer obsessed, but another half of his brain is a dreamer who loves moonshots. When I see something like Natick, you could say it’s a moonshot, but not one completely divorced from Microsoft’s core business. I’m really tickled by it. It really perfectly fits the left brain/right brain combination we have right now in the company.”

– By Athima Chansanchai

Source: Microsoft
